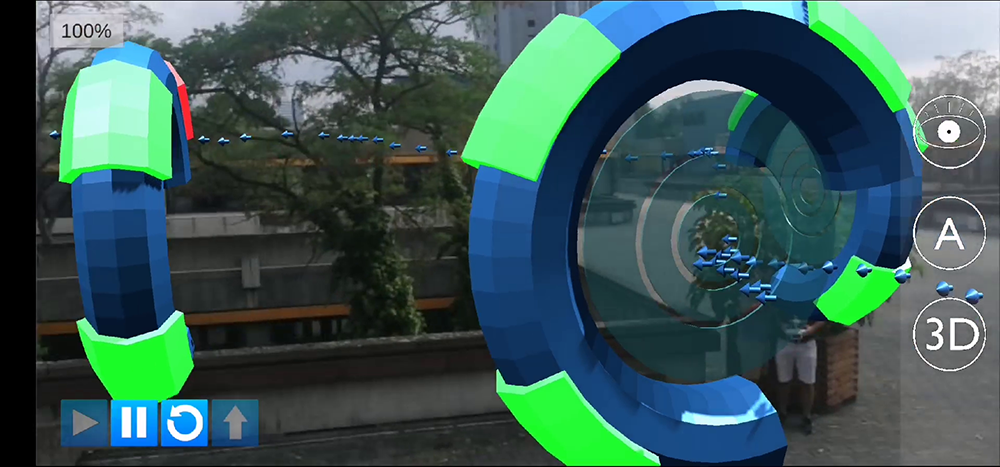

INTERGEO 2019 saw the debut of numerous products along with notable spotlights and updates. It also explored what the intelligent cities of the future might actually look like, and how innovations like AR will change the way people interact with their environments. Unfortunately, some of these innovations are limited by their hardware. But what would happen if the camera weren’t tied to the device? That’s the question the people behind DronOSS asked, and have answered.

The team at DronOSS came out to INTERGEO to talk about ARbox, a cross-platform AR solution that is not physically tied to a device. They’ve designed ARbox to give users a truly unique AR experience no matter where they’re located or what they’re using to connect to the device.

Luciano Mora is the co-founder of DronOSS and is working to change perceptions around what users should expect out of AR. We caught up with him to talk about the opportunities that ARbox is designed to open up, what kind of environments the product might be best utilized in, how they’re looking to change expectations around training for drone pilots and much more.

Jeremiah Karpowicz: What led you to develop an augmented reality system for drones and robots? Was it more about the capabilities of the technology, or an opportunity in the market?

Luciano Mora: Well, I would say a bit of both. For several years we had been working on another drone-related project that was canceled due to external factors, and thanks to it we realized the potential of using Augmented Reality from the point of view of the drone. That is what we ended up calling Remote Augmented Reality.

We then looked at how that technology could be applied in the drone ecosystem and whether there was a need and a niche for it. That is when we decided it would be a perfect technology for pilot training and entertainment.

We had the know-how and the idea, and the market was there, waiting for us. That is why we decided to found DronOSS, secured funding from the German government and started developing the ARbox. Well, to tell the truth, we had to work on the idea and the first prototypes on our own for a year before we got the funds we needed.

It wasn’t an easy year, but when people started trying our first prototype, the feedback was so positive that it kept us going until we got the funds. Pilots were impressed when they saw the 3D elements floating in the air, and they started flying through and around them with a real Phantom 2 drone. I think what impressed them most was that they could interact with those 3D elements in real time. And those first prototypes used only GPS for positioning; SLAM was implemented around a year later.

Why is it so important that the AR capabilities of DronOSS are not tied to a device? What kind of opportunities can be enabled on account of this feature?

By not being tied to one specific device, our Remote Augmented Reality system can be combined with any moving (drone, robot, RC car, RC boat) or static platform. This way, anyone with a platform capable of carrying the weight of the ARbox (our current prototype weighs around 100 g) could integrate AR features into their device. Users have a higher degree of freedom, since they can use their own drone to experience Remote Augmented Reality.

We focused on drones because we see huge potential in them in the coming years, but letting operators interact with AR and visualize it remotely, in real time, on top of an HD video stream from the point of view of the remote camera has potential far beyond drones. Just imagine it applied to, for example, a Mars Exploration Rover. You could have waypoints, real-time information or POIs on top of the video feed.

Tell us more about that concept of Remote Augmented Reality. How and why is this feature unique?

Current AR devices and applications are mainly focused on the user’s location and immediate surroundings. With our Remote Augmented Reality system, we basically take the camera and sensors out of the usual AR system (AR glasses, smartphone or tablet) and put them somewhere else at a remote location (e.g. on a drone). Because we can locate the camera (with GPS and/or Simultaneous Localization and Mapping [SLAM]), we can tell our software (which is installed on the client device held by the drone pilot, i.e. a tablet, smartphone or computer) at which other location we want to place a virtual element.
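To make “place a virtual element at another location” a little more concrete, here is a minimal sketch of one way to express a GPS fix as a local east/north/up offset in metres from a reference point, such as the take-off spot. It assumes GPS-only positioning and a flat-Earth (equirectangular) approximation, which is only reasonable over the short distances of a training area. The helper name `gps_to_local_enu` is hypothetical, and this is not DronOSS’s implementation.

```python
import math

# Mean Earth radius in metres (spherical-Earth assumption).
EARTH_RADIUS_M = 6_371_000.0


def gps_to_local_enu(lat_deg, lon_deg, alt_m, ref_lat_deg, ref_lon_deg, ref_alt_m):
    """Approximate east/north/up offset (metres) of a GPS fix from a reference fix.

    Hypothetical helper: uses an equirectangular (flat-Earth) approximation,
    so it is only suitable for small areas such as a drone training field.
    """
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    # Longitude degrees shrink with latitude; use the mean latitude of both fixes.
    mean_lat = math.radians((lat_deg + ref_lat_deg) / 2.0)
    east = d_lon * math.cos(mean_lat) * EARTH_RADIUS_M
    north = d_lat * EARTH_RADIUS_M
    up = alt_m - ref_alt_m
    return east, north, up
```

Expressing both the drone and each virtual anchor in the same local frame reduces “where is the wind turbine relative to the camera?” to a vector subtraction. A SLAM-based setup would instead estimate the camera pose relative to its own map rather than deriving it from GPS fixes.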

That can, for example, be a virtual wind turbine, a building, a bridge or a whole facility in 3D. This allows pilots to practice performing inspections with a real drone, while the environment can be changed in a matter of seconds. You don’t need a real wind turbine, and you don’t risk damaging it with the drone. When we fly the drone, equipped with our ARbox, to that location and point its camera at the spot where we placed the wind turbine, you see the turbine (or any other 3D element we implement in the app) inside the video feed, as if it were real.
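Once the camera pose and the anchor of a virtual object are known in a common frame, drawing the object “inside the video feed” comes down to projecting 3D points into the image. The sketch below uses a plain pinhole camera model with no lens distortion and zero roll (roughly what a gimbal holding the image level would give). The function name, the yaw/pitch convention and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not details of ARbox.

```python
import math
from typing import Optional, Sequence, Tuple


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def project_to_image(
    point_enu: Sequence[float],
    cam_enu: Sequence[float],
    yaw_deg: float,
    pitch_deg: float,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float]]:
    """Project a point given in local ENU metres to pixel coordinates (hypothetical helper).

    Pinhole model, no lens distortion, zero roll. yaw_deg is a compass heading
    (0 = north, 90 = east); pitch_deg is positive when the camera tilts up.
    Returns None if the point is behind the camera.
    """
    psi = math.radians(yaw_deg)
    theta = math.radians(pitch_deg)
    # Axes of a level camera facing compass heading yaw_deg (x = east, y = north, z = up).
    flat_forward = (math.sin(psi), math.cos(psi), 0.0)
    right = (math.cos(psi), -math.sin(psi), 0.0)
    world_up = (0.0, 0.0, 1.0)
    # Tilt the forward and up axes about the right axis by the pitch angle.
    forward = [math.cos(theta) * f + math.sin(theta) * u for f, u in zip(flat_forward, world_up)]
    up = [-math.sin(theta) * f + math.cos(theta) * u for f, u in zip(flat_forward, world_up)]

    d = [p - c for p, c in zip(point_enu, cam_enu)]
    x_cam = _dot(d, right)    # positive to the right of the image centre
    y_cam = -_dot(d, up)      # positive below the image centre (image rows grow downward)
    z_cam = _dot(d, forward)  # depth along the optical axis
    if z_cam <= 0.0:
        return None  # behind the camera: nothing to draw
    return fx * x_cam / z_cam + cx, fy * y_cam / z_cam + cy
```

A renderer would project each vertex of the wind-turbine model this way (or hand the same pose and intrinsics to a 3D engine) and composite the result over the video frame; points behind the camera return `None` and are simply not drawn.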

Are there limitations around the kinds of drones that your hardware can be mounted onto?

Our system is independent of the drone platform. There is no hardware connection between the drone and the ARbox, so our Remote Augmented Reality system can be used as a stand-alone system (always in combination with a smartphone, tablet or computer to visualize the AR).

The only limitation is the payload capacity of the drone. If the drone is able to carry the weight of the ARbox, nothing stands in the way of mounting it onto that drone. Of course, depending on the drone, we’d have to figure out the best way to mount the ARbox onto it. A gimbal is the best option, since the transmitted image from the ARbox camera then always stays horizontal and stable.

What are some of the specific scenarios or applications that you’re especially excited to partner with users or developers in order to enable?

In the long run, we want to be the leader in training technology for commercial UAV piloting, so users of our Remote Augmented Reality system should be able to train and prepare for any type of mission. We want to become the standard for examining drone pilots, since with AR an examiner would have a very reliable and precise source for determining how well the student has flown. Remember that collisions of the drone with the 3D elements are detected in real time. Given the growing number of drone applications and pilots, we expect that in the future drone pilots will need to pass a practical exam in order to obtain a drone license.
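Real-time collision detection against virtual objects can be approximated quite simply. As a minimal sketch, assuming the drone is modelled as a sphere and each part of a virtual object as an axis-aligned box in a local metric frame (simplifications chosen for illustration; the interview does not describe DronOSS’s actual method), a sphere/box test by clamping could look like this. `Box`, `sphere_hits_box` and `check_collisions` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box:
    """Axis-aligned bounding box of one part of a virtual object (local metres)."""
    name: str
    min_corner: Vec3
    max_corner: Vec3


def sphere_hits_box(center: Vec3, radius: float, box: Box) -> bool:
    """True if a sphere (the drone) touches or overlaps the box."""
    # Closest point of the box to the sphere centre, found by clamping each axis.
    closest = [min(max(c, lo), hi) for c, lo, hi in zip(center, box.min_corner, box.max_corner)]
    dist_sq = sum((c - p) ** 2 for c, p in zip(center, closest))
    return dist_sq <= radius * radius


def check_collisions(drone_pos: Vec3, drone_radius: float, boxes: List[Box]) -> List[str]:
    """Names of the virtual parts the drone currently touches (run once per frame)."""
    return [b.name for b in boxes if sphere_hits_box(drone_pos, drone_radius, b)]
```

For example, with a tower box spanning `(-1.5, -1.5, 0.0)` to `(1.5, 1.5, 80.0)`, a drone of radius 0.6 m at `(2.0, 0.0, 40.0)` touches the tower (closest distance 0.5 m), while one at `(3.0, 0.0, 40.0)` does not. An examination tool could log every frame in which the returned list is non-empty.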

Primarily, we are thinking of training for firefighters, inspections, urban planning, agriculture and security/surveillance. Users in these fields will be able to train for real situations with our system anywhere there is space to fly a drone, without risking harm to drones, assets or firefighters.

Another user group that will benefit from our system is professional drone pilots who perform inspections. With Augmented Reality, they get more information on their real-time video feed and can make better-informed decisions more quickly.

What’s the best first step for a company or developer that wants to create one of the scenarios or training environments that we’ve been talking about?

Anyone who sees the potential in our solution is welcome to reach out to us to discuss possible ways to achieve adoption. Currently, we are looking for a corporate partner or pilot customer who understands the potential of our technology and is willing to help us bring our current prototype to an MVP.
