Contributed by Kevin Andrews, Trimble Applanix

The ambitious advances made under the umbrella of autonomy have given the survey, construction, and even commercial markets a significant edge in recent years. Much as the space race more than 50 years ago produced real-world advances, autonomous vehicle development has transformed the way spatial data is collected and processed.

No single advancement is driving these greater possibilities. It's the fusion of sensors, a practice that has broadened considerably in recent years. What used to be a designation largely focused on hardware and algorithms (think Kalman filters) is now much more comprehensive, reflecting the greater amounts of data gathered and the growing demand for high-accuracy mapping.

With today's more advanced tools, there's considerable focus on data collection workflows, on the ability to use all available data with speed and accuracy to get to a high-quality result.

In essence, as with nearly all technology-enabled practices, we're seeing traditional survey techniques evolve to become smarter, more portable, and less expensive.

Beyond Convention

Now, the combination of increased processing power and highly sophisticated algorithms is taking traditional methods to almost unrecognizable capability levels.

These advancements, in turn, are leading to some perhaps unexpected solutions that range from bicycle-equipped pavement management systems to semi-autonomous surface/subsurface robots. Geospatial data gathering with technology such as lidar is even available on some smartphones.

What does this mean for the survey industry? Sensor fusion is all about taking conventional survey practices and condensing them into powerful workflows that help collect better data, in more places, with less effort.

A SLAM Dunk

Recent advancements in computing power have accelerated the evolution of sensor-focused solutions. Algorithms have become more refined and efficient, which leads to enhanced performance.

As these technologies continue to evolve, their applications multiply. At the heart of many of today’s advances is lidar, a technology developed more than 50 years ago. Multi-sensor fusion using the three most popular types of sensors (visual sensors, lidar sensors, and inertial measurement units (IMUs)) is becoming ubiquitous in simultaneous localization and mapping (SLAM) solutions. This combination allows a system to determine its position and orientation within a map while creating a spatial representation of its environment.

Though lidar SLAM is a relatively new term, the core techniques it borrows from haven't fundamentally changed. Industry professionals are still using traditional survey methods like bundle adjustments and least squares calculations to solve for the location of the mapper while at the same time generating a coherent map. What's different is that the technology is now easier to use, more able to handle larger datasets, less costly, and more versatile.

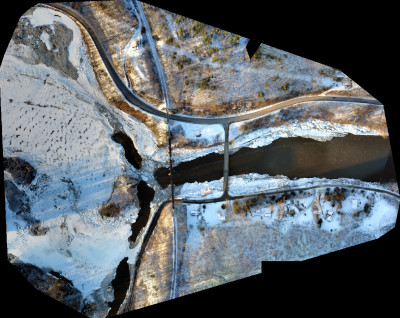

These solutions operate in more dynamic environments, such as mapping roads or underwater surfaces, requiring less on-the-ground, sensor-to-sensor calibration.

Dynamic Conditions

Among the biggest challenges with sensor fusion is compensating for errors, including those that stem from boresight angles, drift, and data acquisition methods.

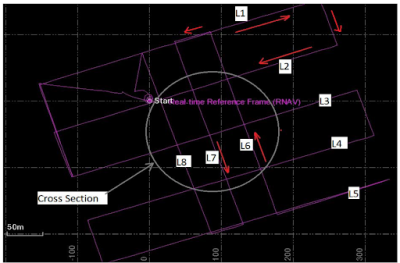

For instance, mobile scanning with lidar typically produces multiple scans of the same object (e.g., a curb or telephone pole), because the driver passes the same objects from different lanes or from different directions. Each scan is slightly shifted from the others. Even if the error is within the required accuracy for the mapping system or within the tolerances of the project, these duplicate copies are frustrating.

The traditional method for aligning the scans is to treat each pass as a separate but connected point cloud and adjust it in 3D space so the differences in the overlap are minimized. It’s time-consuming and not entirely accurate. In mobile mapping, the errors vary at each point of the pass because GNSS errors fluctuate, so a single adjustment per pass cannot remove them all. Technicians can reduce the mismatch in the overlap, but never eliminate it completely. For many practitioners, the biggest problem with a network of overlapping passes is determining which pass is more accurate than the others.
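To make the pass-to-pass adjustment concrete, here is a minimal Python sketch. It is an illustration under strong assumptions, not any vendor's method: the matching points in the two passes are assumed to be already paired, only a single translation is estimated (production tools also solve for rotation and find the correspondences themselves), and the coordinates and the names `estimate_shift`, `apply_shift`, and `rms_mismatch` are hypothetical.

```python
def estimate_shift(pass_a, pass_b):
    """Least-squares translation that best moves pass_b onto pass_a.

    Both inputs are equal-length lists of (x, y, z) tuples in meters, where
    pass_a[i] and pass_b[i] are assumed to be the same physical point.
    """
    if len(pass_a) != len(pass_b) or not pass_a:
        raise ValueError("passes must be non-empty and the same length")
    n = len(pass_a)
    # For a translation-only model, the least-squares answer is the mean offset.
    return tuple(
        sum(a[axis] - b[axis] for a, b in zip(pass_a, pass_b)) / n
        for axis in range(3)
    )


def apply_shift(points, shift):
    """Return a copy of the points moved by the given (dx, dy, dz)."""
    return [tuple(p[axis] + shift[axis] for axis in range(3)) for p in points]


def rms_mismatch(pass_a, pass_b):
    """Root-mean-square 3D distance between paired points."""
    squared = [
        sum((a[axis] - b[axis]) ** 2 for axis in range(3))
        for a, b in zip(pass_a, pass_b)
    ]
    return (sum(squared) / len(squared)) ** 0.5


# Hypothetical curb points seen on two passes; the offset varies along the pass.
pass_a = [(0.0, 0.0, 10.00), (5.0, 0.1, 10.02), (10.0, 0.2, 10.05)]
pass_b = [(0.03, -0.02, 10.04), (5.02, 0.09, 10.05), (10.04, 0.17, 10.10)]

shift = estimate_shift(pass_a, pass_b)
aligned_b = apply_shift(pass_b, shift)
print("shift (m):", shift)
print("RMS mismatch before (m):", rms_mismatch(pass_a, pass_b))
print("RMS mismatch after (m):", rms_mismatch(pass_a, aligned_b))
```

Because the offset between the two passes changes from point to point, one shift per pass lowers the mismatch but leaves a residual, which is the limitation described above.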

Compensation Techniques

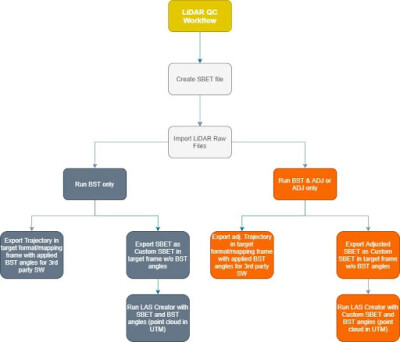

Contemporary sensor fusion tools are emerging that can compensate for these errors and help users achieve the highest level of georeferencing accuracy with lidar sensors. These solutions support boresight calibration between the IMU and lidar sensor, trajectory adjustment, and point cloud generation.
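A quick calculation suggests why boresight calibration matters. The Python sketch below uses simple geometry: an uncorrected angular misalignment between the IMU and the lidar displaces a point sideways by roughly the range multiplied by the tangent of the angle. The function name `boresight_offset_m` and the example range and angle are hypothetical values chosen for illustration.

```python
import math


def boresight_offset_m(range_m, misalignment_deg):
    """Approximate lateral point displacement caused by an angular misalignment.

    range_m: distance from the sensor to the target, in meters.
    misalignment_deg: uncorrected boresight angle error, in degrees.
    """
    return range_m * math.tan(math.radians(misalignment_deg))


# Hypothetical case: 0.05 degrees of residual misalignment at a 100 m range.
offset = boresight_offset_m(100.0, 0.05)
print(f"displacement: {offset * 100:.1f} cm")
```

The displacement grows in proportion to range, so the same small angle matters far more from an aircraft than from a vehicle scanning a nearby curb.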

The latest algorithms fuse all measurements together to produce matching point clouds for applications that range from UAVs to land mobile mapping and even indoor surveys. Advanced lidar SLAM solutions break the traditional pick-one-pass concept, greatly improving georeferencing accuracy in areas of poor GNSS coverage and enabling high-accuracy data collections in virtually any environment.

Leveraging Lidar

The primary objective of this technology is to generate homogeneous point clouds from overlapping scenes by leveraging high-quality mapping lidar sensors through the application of the lidar SLAM approach. It essentially fuses all measurements and compensates for errors such as boresight, GNSS-INS inaccuracies, sensor errors, and data acquisition errors.

In areas of overlap, there is no longer any concept of the pass or survey line, so there is no need to weight passes or decide which one is "correct." Instead, every single scan line is its own pass, and the "truth" is the theoretical surface being solved for. This only works with a very good understanding of the errors in the navigation system and the GNSS-INS solution. The navigation error model serves as the weighting and constraint for the lidar adjustment. Done right, the user gets both maximum accuracy and precision. Done wrong, unmodeled errors can pull and distort the whole project or burn CPU cycles without ever converging on a proper solution.
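The role of the error model as a weight can be illustrated with a deliberately simplified Python sketch. It assumes several scan lines observe the height of the same surface point and that each observation carries a standard deviation taken from the navigation error model; the weighted least-squares estimate is then the inverse-variance weighted mean. A real lidar SLAM adjustment solves for trajectory corrections and the surface together, so the function name `fuse_heights` and the numbers below are hypothetical.

```python
def fuse_heights(observations):
    """Inverse-variance weighted estimate of one surface height.

    observations: list of (height_m, sigma_m) pairs, where sigma_m is the
    standard deviation assumed to come from the navigation error model.
    Returns (estimated_height_m, estimated_sigma_m).
    """
    if not observations:
        raise ValueError("at least one observation is required")
    # Observations with smaller predicted error receive larger weight.
    weights = [1.0 / (sigma ** 2) for _, sigma in observations]
    total_weight = sum(weights)
    estimate = sum(w * h for w, (h, _) in zip(weights, observations)) / total_weight
    estimate_sigma = (1.0 / total_weight) ** 0.5
    return estimate, estimate_sigma


# Hypothetical case: one scan line under good GNSS, one under degraded GNSS.
height, sigma = fuse_heights([(10.00, 0.01), (10.04, 0.02)])
print(f"fused height: {height:.3f} m, sigma: {sigma:.4f} m")
```

The fused value sits closer to the observation with the smaller predicted error. If those predicted errors are wrong, the weights are wrong too, which is how unmodeled errors end up distorting the result.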

As an example, a survey team used a mobile mapping system equipped with two lidar sensors (left and right), a spherical camera, and a GNSS-INS system to map about 1 km of local streets in a town center with narrow roads (4-8 m wide) and buildings up to 12 m tall. Using lidar SLAM techniques, the team was able to improve the absolute vertical accuracy by more than 250%, yielding a performance of 1.4 cm with a maximum residual of 3.3 cm.

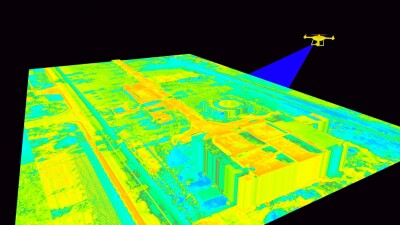

Similar sensor fusion techniques are greatly improving aerial mapping as well.

Aerial Advantage

Using UAVs for mapping has long been plagued by several challenges. Aerial surveys typically require precise initial camera positioning and on-ground targets as well as complex manual calibration and software able to handle data variations.

Again, improvements in sensor fusion, in this case between the IMU and the camera, dramatically improve ease of use and data accuracy. The latest solutions, such as Applanix’s Camera QC, can calibrate the angular misalignment between the IMU and the camera, as well as camera parameters such as focal length.

In one case, a customer is taking advantage of this automated calibration to process four cameras simultaneously within a single boresight project – instead of previous workflows, where each sensor required individual processing. In addition, the firm’s cartographers leverage the batch processing mode, which generates a single configuration file in XML format. This capability has virtually eliminated the manual project setup traditionally performed for each sensor, streamlining the entire workflow.

Bottom line, according to the customer, these implementation changes have delivered two key benefits: they are able to achieve more precise alignment between sensors while simultaneously reducing total processing time by a significant margin. The combination of improved accuracy and enhanced efficiency has made the technology an invaluable addition to the customer’s aerial data processing pipeline.

Power Race

Whether mapping city infrastructure, managing pavements, or surveying remote locations, today’s sensor fusion advances are all about turning complex data into actionable insights and driving productivity by simplifying data collection and processing, even in challenging conditions.

The biggest challenge for many today is processing power. The massive volumes of data collected by mobile mapping systems are difficult to store and transmit. Holding all of that data in one massive adjustment takes enormous computing power and memory, as well as optimized, efficient algorithms. The more algorithms and tools advance, the more customers want to push them with larger and larger datasets. It’s a continuing balance of power.

Accessible Innovation

The future of mapping isn't about revolutionary technology breakthroughs, but about making powerful tools accessible, efficient, and adaptable to real-world challenges. Sensor fusion represents a fundamental shift from single-sensor solutions to integrated systems that leverage the strengths of multiple data sources while compensating for individual sensor limitations.

As processing capabilities continue to expand and algorithms become more sophisticated, we can expect further convergence of technologies that will make high-precision spatial data collection routine rather than specialized. The combination of improved accuracy, reduced costs, and simplified workflows is democratizing advanced surveying capabilities across industries and applications.

The evolution from traditional survey methods to sensor fusion workflows represents not just technological advancement, but a fundamental change in how spatial data is conceived, collected, and processed. This transformation promises to continue driving innovation across the geospatial industry for years to come.

About the Author

As director of land products at Trimble Applanix, Kevin Andrews leads the team delivering advanced positioning and orientation technologies for reality capture on ground-based moving platforms. He previously served as a strategic marketing manager for Trimble’s autonomous vehicle businesses and as product manager for Applanix land and indoor products.
