Effectively utilizing drones for large-scale, time-sensitive missions has proven to be a challenge in various applications of the technology. Collecting a vast amount of data from the air to support anything from linear infrastructure inspections to land surveillance projects to maritime patrol activities is easier than ever, but using that data to quickly make decisions or identify issues is a very different matter.

This challenge is one that Overwatch Imaging was specifically created to address. The company designs and manufactures imaging systems with custom onboard AI software for both piloted and unmanned aircraft. Their solutions have helped organizations like NOAA support flood forecasting and response actions in emergencies. Drs. Suzanne Van Cooten and Robert Moorhead will explore those details at Commercial UAV Expo Americas in October, but their solution has been able to improve efficiencies, reduce costs and enhance safety in a variety of industries for numerous organizations.

As the CEO of Overwatch Imaging, Greg Davis has shaped this visionary application of drone technology to create these real, tangible results. He’s set to talk through this perspective during the Drone Industry Visionaries session at Commercial UAV Expo Americas, but we wanted to get a better sense of what these results look like and how he believes they’ll shape the future of the space before he further details all of this info at the event. We connected with Greg to talk about his previous work at Insitu and Lockheed Martin, asked about what it means to automatically process data in large-scale environments, discussed what he’s looking forward to seeing take shape in the industry and much more.

Jeremiah Karpowicz: Can you tell us about the genesis of Overwatch Imaging? Was the company started in response to the need of a particular user, or because of the possibilities that you believed the company could help create?

Greg Davis: Overwatch Imaging was created in response to a gap in the solution space of available technology. This gap is our focus area: we help our users quickly find what they are looking for, in large-area, time-critical environments. We saw the explosion of new technology around commercial drones, automation

How has your experience at Insitu and Lockheed Martin impacted the way in which you’re leading the company?

I really value the experience I gained with those companies. The project I worked on with Lockheed Martin was extraordinarily sophisticated and well managed and helped me learn how to break down a major technical challenge into solvable pieces through a strong systems engineering approach. My time at Insitu was incredible and taught me about scaling up a company from the early adopter stage to broad adoption. Insitu grew about 8x while I was there, and the efforts and leadership it took to accomplish that

How have you seen attitudes and approaches to drone technology change over the years? Has the hype associated with the technology ultimately been more helpful or hurtful in terms of practical adoption?

I take the long view on this. Unmanned systems have been flying for decades, and over that span of time, drones and autonomy

I’m excited that so many people are now using, developing, and thinking about drones and related technology, and that energy helps make these systems better. There have been some groups who have promised far more than they could deliver, and that hurts adoption and sets the industry back, but I think those are just short-term issues. In the long run, if the systems we make deliver the benefits we promise, then adoption will continue to grow.

What are some of the measurable ways in which you’ve seen your solution make a difference to your clients? Is that more about

Our systems are making a difference in all those ways, measured by enhanced safety, increased efficiency and reduced costs.

For some clients, our ability to quickly find and share risks means that people are kept out of harm’s way, and responders can focus on their response rather than the search. For others, our ability to collect a higher resolution dataset compared to satellites, from a low-cost air vehicle, enables new applications that were not viable previously. Another measure is search time for a missing person in a maritime rescue situation compared to using a standard video gimbal, and we’ve demonstrated a dramatic improvement in this area, which benefits safety, efficiency

Tell us about some of the challenges that you’ve seen people run into when it comes to processing data.

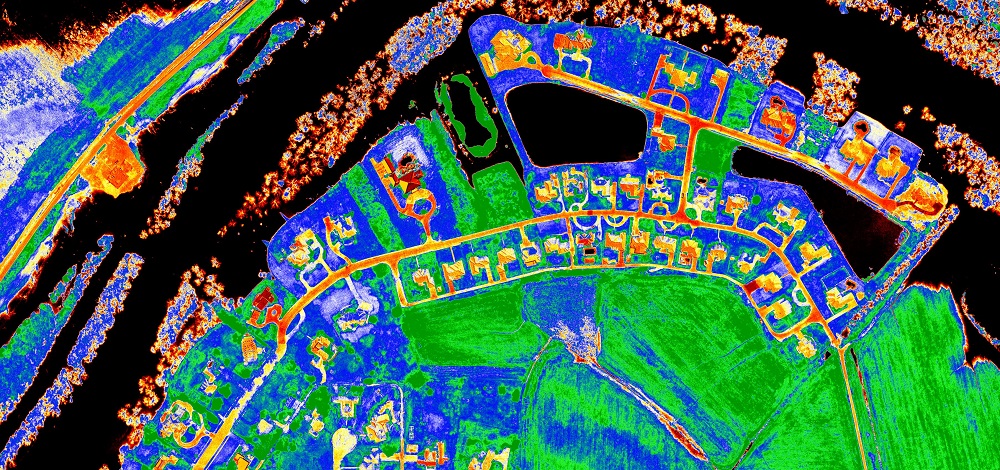

Overwatch Imaging enables real-time visualization of flood onset conditions through large-area multispectral index imagery (black indicates surface water)

In the applications we support,

What does it mean to automatically process data in large-scale, time-critical environments? I imagine doing so is the only way the technology can make sense in certain environments, correct?

In our ideal product scenario, our users never see raw data and we don’t even need to move that raw data from the airborne system. We reduce the data into information, like pins and lines on a map, directly in the imaging system in the air, in real-time. This changes the constraints on an imaging system in really important ways.

Since we’re auto-processing the data, we can collect imagery in a way that is optimized for processing rather than for human viewing, with higher resolution and extra spectral bands that are hard for people to visually process, and with no dwell time in any particular area. Since we’re reducing the data

Have you seen users make a transition from manually processing data to automatically processing this same information? Is that a sensible approach, or do users sometimes create “bad habits” with a manual process that can’t be broken when transitioning to an automatic process?

We sometimes see operators try to manually find small anomalies in large areas, using our imagery or using video gimbals, and it makes me

We do see some resistance to automating the search role, and we always need to prove ourselves, but the differences between manual approaches and our automated approach

Let’s talk about a few of the specific ways your solution is being applied. What can you tell us about your work to support

We’re providing information to NOAA for river and flood forecasting, operating on BVLOS drones from Mississippi State University’s Raspet Flight Research Lab. It’s really a neat application and has a bright future. In this application, we provide multispectral imagery and vector products – lines on a map – that show the location and condition of water in rivers – to river forecasters, over river-scale areas, in real-time. This work uses our Earthwatch automated land surveillance system.

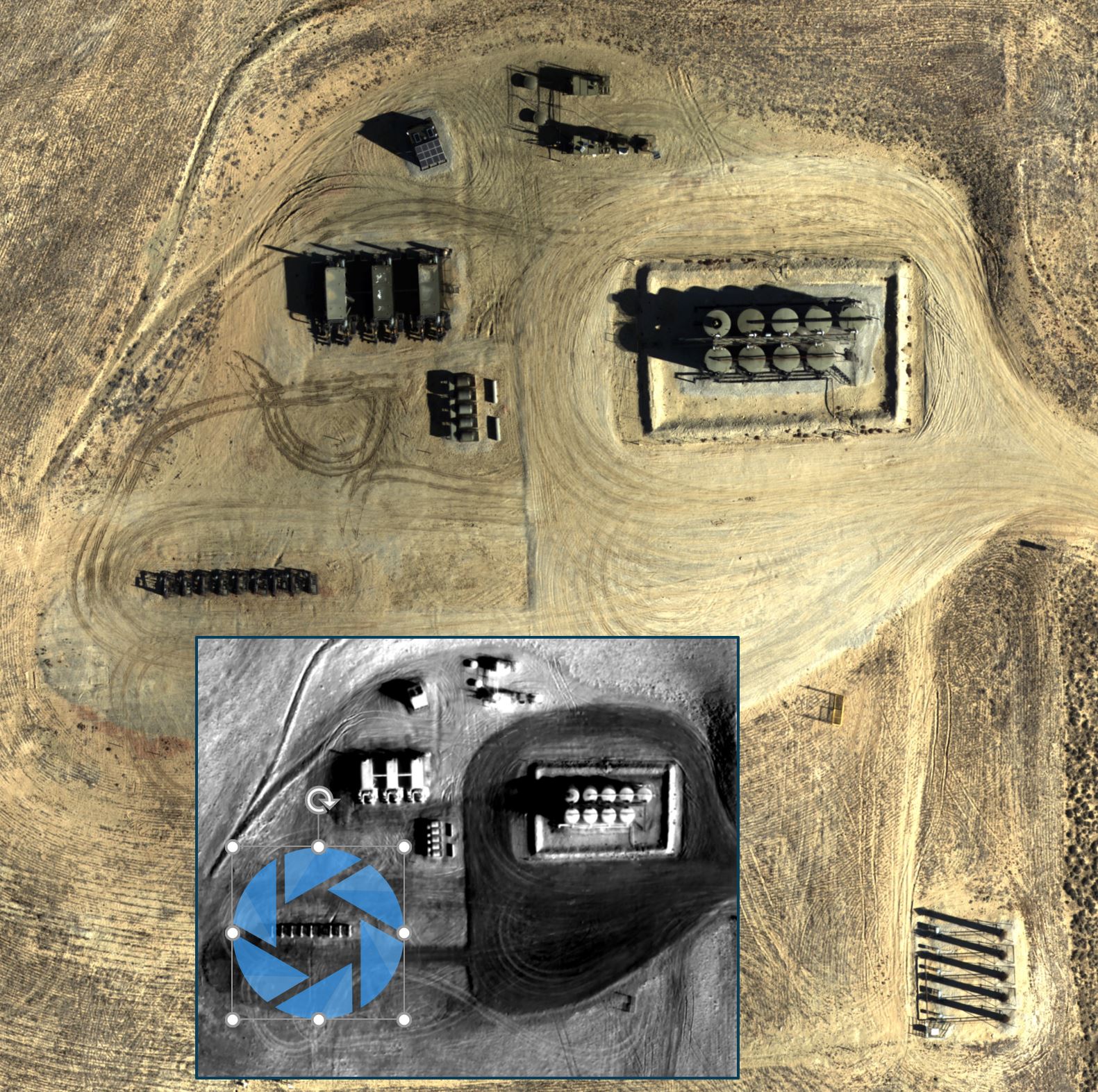

Overwatch Imaging enables automated remote inspection of large-scale infrastructure sites for the oil and gas industry (thermal detection of well site anomaly shown)

The work you’re doing in Europe with Schiebel for maritime safety is pretty exciting. Can you tell us about their traditional approach and what they can do now thanks to your solutions?

We’re delighted to see our maritime search system,

Could this technology change expectations across maritime safety?

Absolutely. Every maritime patrol aircraft should use an automated search sensor because looking for small things in huge bodies of water is simply a job better suited to computers than humans.

The maritime environment is challenging for many reasons, one of which is that it’s so big. To effectively scan big areas, systems need to fly high and move fast, but that makes seeing things harder. It’s the classic example of what we call the soda straw problem: if operators look through the soda straw of binoculars or a zoomed video camera, they can’t see enough area, but if they zoom out, they can’t see the objects in the water.
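To put rough numbers on the soda straw trade-off, here is a minimal sketch using standard pinhole-camera geometry for a camera pointed straight down. The altitude, pixel pitch, sensor width and focal lengths are illustrative assumptions only, not Overwatch Imaging specifications, and the `footprint` helper is a hypothetical name introduced for this example.

```python
def footprint(altitude_m, focal_mm, pixel_pitch_um, pixels_across):
    """Return (ground sample distance in m/pixel, swath width in m)
    for a nadir-pointing pinhole camera over flat terrain or water."""
    # Ground sample distance scales with altitude and pixel size,
    # and shrinks as the lens gets longer (more zoom).
    gsd_m = altitude_m * (pixel_pitch_um * 1e-6) / (focal_mm * 1e-3)
    # Swath width is simply the ground distance covered by one image row.
    swath_m = gsd_m * pixels_across
    return gsd_m, swath_m


# Hypothetical values chosen only to illustrate the trade-off.
ALTITUDE_M = 1000.0      # assumed flight altitude
PIXEL_PITCH_UM = 5.0     # assumed sensor pixel size
PIXELS_ACROSS = 4000     # assumed sensor width in pixels

if __name__ == "__main__":
    for focal_mm in (10.0, 50.0, 250.0):
        gsd, swath = footprint(ALTITUDE_M, focal_mm, PIXEL_PITCH_UM, PIXELS_ACROSS)
        print(f"{focal_mm:5.0f} mm lens: {gsd * 100:5.1f} cm/pixel, swath {swath:6.0f} m")
```

Under these assumptions, zooming in by a factor of 25 sharpens the ground resolution by the same factor but narrows the strip of water covered on each pass by that factor too, which is the trade-off described above.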

What can you tell us about the oilfield extraction site surveillance work you’re currently doing across a massive area in the Middle East?

I can’t tell much, because the system integrator and the end-user do not publicly discuss the solution. We provide high-resolution image maps of a very large area and look for critical anomalies that could pose an immediate safety or security risk. The end users previously used satellite data for this role, but could not acquire space-based datasets with the right resolution and time of collection that they needed.

I like this project because we’re delivering the value of satellites and small drones simultaneously.

How do you see the lessons learned from these use cases being applied elsewhere? How many of the differences you’ve helped enable are specific to a client or region or industry?

We think of our core competency and core competitive advantage as an autonomous imaging system architecture, rather than any particular product or analytics module. Each project we accomplish allows us to refine our core architecture technology and core software, which makes all our future systems better even if they are in different use cases.

Some of our software analytics are quite specific to an industry, and some of our imaging payloads are uniquely tailored to integrate into different unmanned systems, but the core building blocks continue to mature as we incorporate lessons learned from each case.

What are some of the trends or developments with the technology that you’re looking forward to seeing take shape in the near future?

I firmly believe that autonomy with data processing is the key that will unlock a much broader market of application areas. We love seeing the advancements in GPU processing and deep learning software frameworks because these allow us to do more for our clients right away.

We’re proud that we are applying artificial intelligence and autonomous data collection in the real world, today. But there is so much more to do, in terms of automating the data analytics, to realize the full potential of unmanned systems, so I’m really looking forward to that.

If I’m someone who thinks Overwatch Imaging might be a fit for my organization or business, but I’m not sure how best to explore my options, what’s my best first step?

We’re passionate about airborne imaging and automation, and we’ve seen the industry from many angles over a long period of time, so we’re always happy to chat about ideas and potential applications. Our business is comfortable customizing our core technology to build imaging systems for new applications and different host aircraft. If someone is thinking about working with us, he or she should contact us through our website, and we’ll arrange an introductory discussion to test the fit.

To see what else Greg has to say about the future of drone technology along with insights around what needs to happen in the present to see these visions realized, attend his “Drone Industry Visionaries” session at the Commercial UAV Expo, taking place October 28-30 in Las Vegas.
