Many people have trouble interpreting infrared, or thermal, images captured by an industrial drone. They don’t understand what they’re seeing, and thus do not get the most out of their investment in thermal technology.

This isn’t because thermal imaging is incomprehensibly complex, but because it is a fundamentally different way of experiencing the world compared to what we see with our eyes.

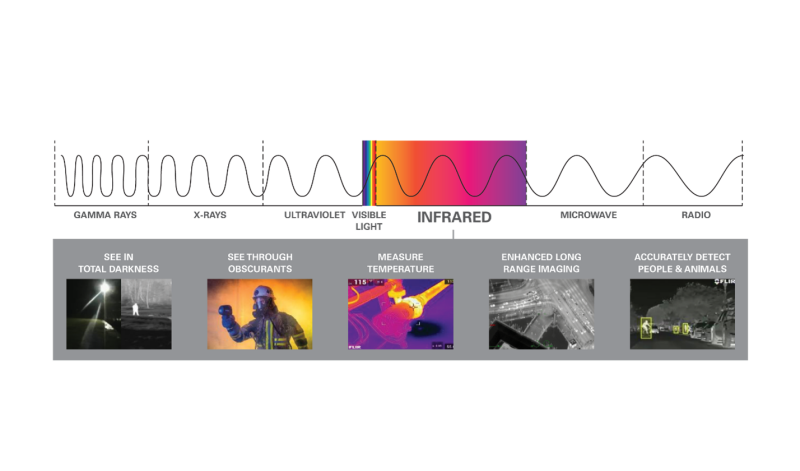

The first thing to get comfortable with is the fact that infrared cameras see a different type of energy than you see with your eyes. Your eyes—and your typical drone camera—see visible light, while a thermal camera sees infrared energy.

According to Kelly Broadbeck, UAS product manager at Teledyne FLIR, “while visible and infrared are both parts of the electromagnetic spectrum, what differentiates them is their wavelength. Electromagnetic energy travels in waves, so the wavelength is the physical distance from the peak of one wave to the peak of the next.”

In the infrared spectrum, these wavelengths are typically measured in micrometers, or millionths of a meter (often shortened to “microns”). Visible light spans the waveband from 0.4 to 0.75 microns, while the infrared energy detected by most drone thermal cameras spans the 7.5-to-14-micron waveband.
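As a rough illustration of the two wavebands quoted above, the following Python sketch checks which band a given wavelength falls into. The function and constant names are made up for this example; only the band limits come from the text.

```python
# Waveband limits in micrometers (microns), taken from the figures quoted above.
VISIBLE_BAND_UM = (0.4, 0.75)
LONGWAVE_IR_BAND_UM = (7.5, 14.0)


def classify_wavelength(wavelength_um: float) -> str:
    """Return which of the two wavebands (if either) contains the wavelength."""
    if VISIBLE_BAND_UM[0] <= wavelength_um <= VISIBLE_BAND_UM[1]:
        return "visible"
    if LONGWAVE_IR_BAND_UM[0] <= wavelength_um <= LONGWAVE_IR_BAND_UM[1]:
        return "longwave infrared"
    # Anything else is outside what either detector in this article can see.
    return "outside both bands"


green_light = classify_wavelength(0.55)   # falls in the visible band
body_heat = classify_wavelength(10.0)     # falls in the thermal camera's band
```

The gap between the two bands is the point: a detector built for one range simply does not respond to the other.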

These points provide the basis for some fundamental facts people need to understand when getting into thermal imaging. First, a given detector is only sensitive to—and can only see—a certain range of wavelengths. “Your eyes and your typical drone camera are sensitive to and see visible light in the 0.4-0.75-micron waveband,” Broadbeck explained. “Infrared energy’s wavelengths are much too long for our eyes to see—that’s why thermal cameras can ‘see the invisible.’”

Next, the different wavelengths within the visible light waveband (often depicted as the colors of the rainbow) are interpreted by our eyes as that range of colors. Shorter wavelengths are the blues and violets, while the longer wavelengths are on the orange and red end of the spectrum. Because thermal imagers don’t detect visible light, they don’t detect color. Remember: color is a function of the visible light we see with our eyes, and infrared energy is invisible to our eyes.

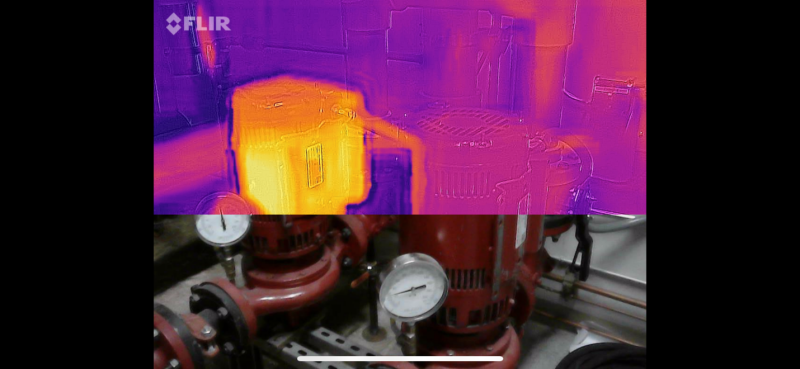

Because of this, we must assign false colors, shown as different color palettes in your thermal camera, to the varying intensities of infrared energy detected by the camera. That’s what you’re seeing with a thermal camera: varying intensities of infrared energy that come (mostly) from the surface of the object you’re looking at.
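To make the idea of false color concrete, here is a minimal Python sketch that maps the same infrared intensities to two different palettes. The intensity values, the function names, and the three-stop color palette are all invented for illustration; real cameras ship their own palettes and scaling.

```python
def normalize(readings):
    """Scale raw intensity readings to the range 0.0-1.0."""
    lo, hi = min(readings), max(readings)
    if hi == lo:
        return [0.0 for _ in readings]
    return [(r - lo) / (hi - lo) for r in readings]


def white_hot(level):
    """Grayscale palette: more infrared energy is drawn brighter."""
    v = round(level * 255)
    return (v, v, v)


def blue_red_yellow(level):
    """Hypothetical three-stop palette: dark blue -> red -> yellow."""
    stops = [(0, 0, 128), (255, 0, 0), (255, 255, 0)]
    scaled = level * (len(stops) - 1)
    i = min(int(scaled), len(stops) - 2)   # index of the lower color stop
    t = scaled - i                         # position between the two stops
    return tuple(round(a + (b - a) * t) for a, b in zip(stops[i], stops[i + 1]))


raw = [120, 180, 240]  # made-up detector intensities for three pixels
levels = normalize(raw)
gray = [white_hot(x) for x in levels]
colored = [blue_red_yellow(x) for x in levels]
```

Both outputs describe exactly the same detected energy. Switching palettes changes only how the intensities are drawn, not what the camera measured.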

There are a couple of significant differences between visible light and infrared energy: we can see visible light, but we can’t see infrared; and the wavelengths within visible light equate to different colors, while infrared energy has no color at all. If you can start by remembering that a thermal camera is detecting and displaying differences in intensities of infrared energy, you’ve made a big step towards understanding infrared imaging.

Heat vs. Temperature

Another problem in interpreting infrared images is that many people confuse heat energy with temperature. They are not the same thing. When something looks “hot” to your thermal camera, that just means it’s giving off more heat energy – it may be a higher temperature, and it may not.

“All of the molecules that make up everything on earth are oscillating,” Broadbeck explained. “As you add heat energy to a substance its molecules will oscillate faster, creating more friction between molecules, and therefore increasing the substance’s temperature. What we see with a thermal camera is the amount of heat energy coming off something, not its temperature.”

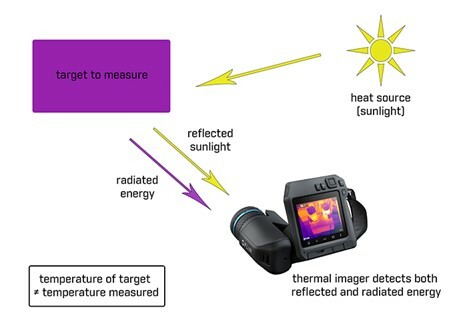

Temperature can be thought of as the result of having more or less heat energy in a substance – if we add heat energy, temperature will go up; if we remove energy, temperature will go down. Because our cameras detect heat energy, we have to remember that the temperature values we see on an infrared image are calculated, not detected. And those calculations are impacted by a few very important variables that not only influence how hot something looks, but its measurement as well.

What Makes Something Look Hot or Cold?

For the most part, the infrared energy we see coming from an object is coming from the object’s surface; but that energy isn’t necessarily coming from the object itself. It could be coming from the object, but it could also be reflected off the object, passing through the object, or a combination of all three of these. In order to properly interpret an image and measure the temperatures contained within it, we first have to understand where the energy we’re seeing is coming from.

Energy that is coming from an object directly is called emitted energy. “This is energy contained in the object and being radiated from it,” stated Broadbeck. “Reflected energy—just like you’re used to seeing with your eyes—is thermal energy that originated from something else but bounces off the object you’re looking at and into your camera.”

When energy from something behind the object you’re looking at passes through your object of interest, that material is said to be “transmissive.” That means the energy you’re seeing is being transmitted through your object of interest, not emitted from it. Everything you see in an infrared image is some combination of emitted, reflected, and/or transmitted energy. This relationship can be shown mathematically like this: Emitted + Reflected + Transmitted = 1 (with one representing 100 percent of the infrared energy being seen).
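The relationship above can be worked through in a few lines of Python. This is only a sketch of the arithmetic: the function name and the example fractions are assumptions chosen for illustration, not measured values for any real material.

```python
def reflected_fraction(emitted: float, transmitted: float = 0.0) -> float:
    """Solve Emitted + Reflected + Transmitted = 1 for the reflected share."""
    if not (0.0 <= emitted <= 1.0 and 0.0 <= transmitted <= 1.0):
        raise ValueError("fractions must be between 0 and 1")
    reflected = 1.0 - emitted - transmitted
    if reflected < -1e-12:
        raise ValueError("emitted + transmitted cannot exceed 1")
    # Clamp tiny negative values caused by floating-point rounding.
    return max(reflected, 0.0)


# An opaque object (nothing transmitted) that emits 90 percent:
opaque_case = reflected_fraction(0.9)

# A hypothetical thin film that emits 20 percent and transmits 70 percent:
film_case = reflected_fraction(0.2, 0.7)
```

In both made-up cases the remaining share, roughly 10 percent, is reflected energy that originated somewhere other than the object itself.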

The good news is that relatively few things are significantly transmissive to longwave infrared energy, so the vast majority of things you’ll see are a combination of emitted and reflected energy (Emitted + Reflected = 1). The bad news is that an object’s transmissivity to infrared energy can be exactly opposite of what you’d expect if you’re still thinking of visible light.

Thin film plastics like tarps and garbage bags are highly transmissive to infrared, so you can see through them with a thermal camera, but they are opaque to visible light: you can’t see through them with your eyes. Conversely, normal window glass is highly transmissive to visible light (otherwise it wouldn’t make a very good window), but it is almost entirely opaque to infrared.

These properties often trip up new operators: not only can they not see through glass with an infrared camera, but they will often see reflections in the glass in infrared. To properly interpret a thermal image, an operator must know what they’re looking at and understand its thermal properties in order to judge whether the energy that appears to come from that object actually does.

This is equally true whether an operator is trying to understand which things are actually hot in an image (called a “qualitative” inspection), or if they are trying to get actual temperature measurements (called a “quantitative” inspection).

How Hot is “Hot”?

The measure of how efficient an object is at radiating its heat is called its emissivity. Emissivity is the ratio of the energy an object actually radiates to the energy a perfect emitter at the same temperature would radiate, and it is expressed as a value between 0 and 1.0. An object that is 90 percent emissive therefore has an emissivity of 0.9.

“Remember that everything you see is some combination of emissive and reflective,” Broadbeck said. “So, if an object is 90 percent emissive, it is 10 percent reflective.”

How emissive or reflective an object is will be determined by the following factors, in decreasing order of importance:

- Material – Emissivity is first and foremost a material property. What the thing is made of will be the largest driver of how efficiently it gives off its energy. Generally speaking, organic objects (dirt, rocks, wood, and animals, including people) are highly emissive, often with an emissivity greater than 0.95.

- Surface finish – The smoother and shinier an object is, the lower its emissivity – and therefore the higher its reflectivity – will be. For instance, if you take a rough piece of wood and polish it smooth, you will lower its naturally high emissivity and raise its reflectivity. Conversely, shiny metals are naturally very reflective, but once they corrode, they become more emissive.

- Viewing angle – If you look at a high-emissivity object from too shallow an angle, it will appear more reflective. This is especially important for drone operators because we can easily change the tilt angle of our thermal cameras. To minimize reflections, make sure you’re looking at the surface from as close to a 90-degree angle as possible.

- Geometry – Objects with lots of holes and angles in them can appear hotter than they really are because of those changes in geometry.

When measuring temperatures with a thermal camera (that is, doing a quantitative inspection), all of the above variables need to be compensated for if you want to generate what’s called a true temperature.
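To show why the temperature on screen is calculated rather than detected, here is a simplified Python sketch of one common textbook way to compensate for emissivity. It is not how any particular camera does it. It assumes the object is opaque, ignores the atmosphere, and treats radiated energy as proportional to the fourth power of absolute temperature (the Stefan-Boltzmann relationship), whereas real longwave cameras use band-specific calibration. The function name and the "reflected temperature" input are assumptions introduced for this example.

```python
def compensated_temperature_c(apparent_c, emissivity, reflected_c):
    """Estimate surface temperature from an apparent reading taken at emissivity 1.0.

    ASSUMPTIONS (simplified model, not a camera's actual algorithm):
    - detected energy = emissivity * object energy
                        + (1 - emissivity) * reflected background energy
    - energy is proportional to absolute temperature ** 4
    - the object is opaque and the atmosphere is ignored
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must be greater than 0 and at most 1")
    apparent_k = apparent_c + 273.15
    reflected_k = reflected_c + 273.15
    # Remove the reflected share, then scale up by the emitted share.
    object_term = (apparent_k ** 4 - (1.0 - emissivity) * reflected_k ** 4) / emissivity
    if object_term <= 0.0:
        raise ValueError("inputs are inconsistent with this simplified model")
    return object_term ** 0.25 - 273.15


# Made-up example: a surface reads 50 C with the camera set to emissivity 1.0,
# its actual emissivity is assumed to be 0.9, and the surroundings are at 20 C.
estimate_c = compensated_temperature_c(50.0, 0.9, 20.0)
```

Under these assumptions the estimate comes out higher than the 50-degree apparent reading, because part of what the camera saw was cooler reflected energy. The lower the emissivity, the larger that correction becomes and the more a wrong emissivity setting distorts the result.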

In the weeks to come, watch Commercial UAV News for more articles in this series.
